25-11 Log

A record of what I did each day.

2025-11-28

VS Code Extension

- Wrote a VS Code extension that uploads a picture when it is pasted into a Markdown file. It takes a simple approach by integrating PicGo-Core: after configuring PicGo, the user just needs to press 'cmd + v' to trigger the automatic upload. I learned the project structure of VS Code extension development at the same time.

- By the way, Claude-Opus-4.5 is really powerful. With a simple prompt:

  > develop an extention for vscode, which function is upload picture while paseting it in a markdown files. u just need calling 'picgo' tool in command line.

  and two rounds of revision, it ran successfully.

- Published the extension to the VS Code Marketplace and sent a PR to Awesome-PicGo.

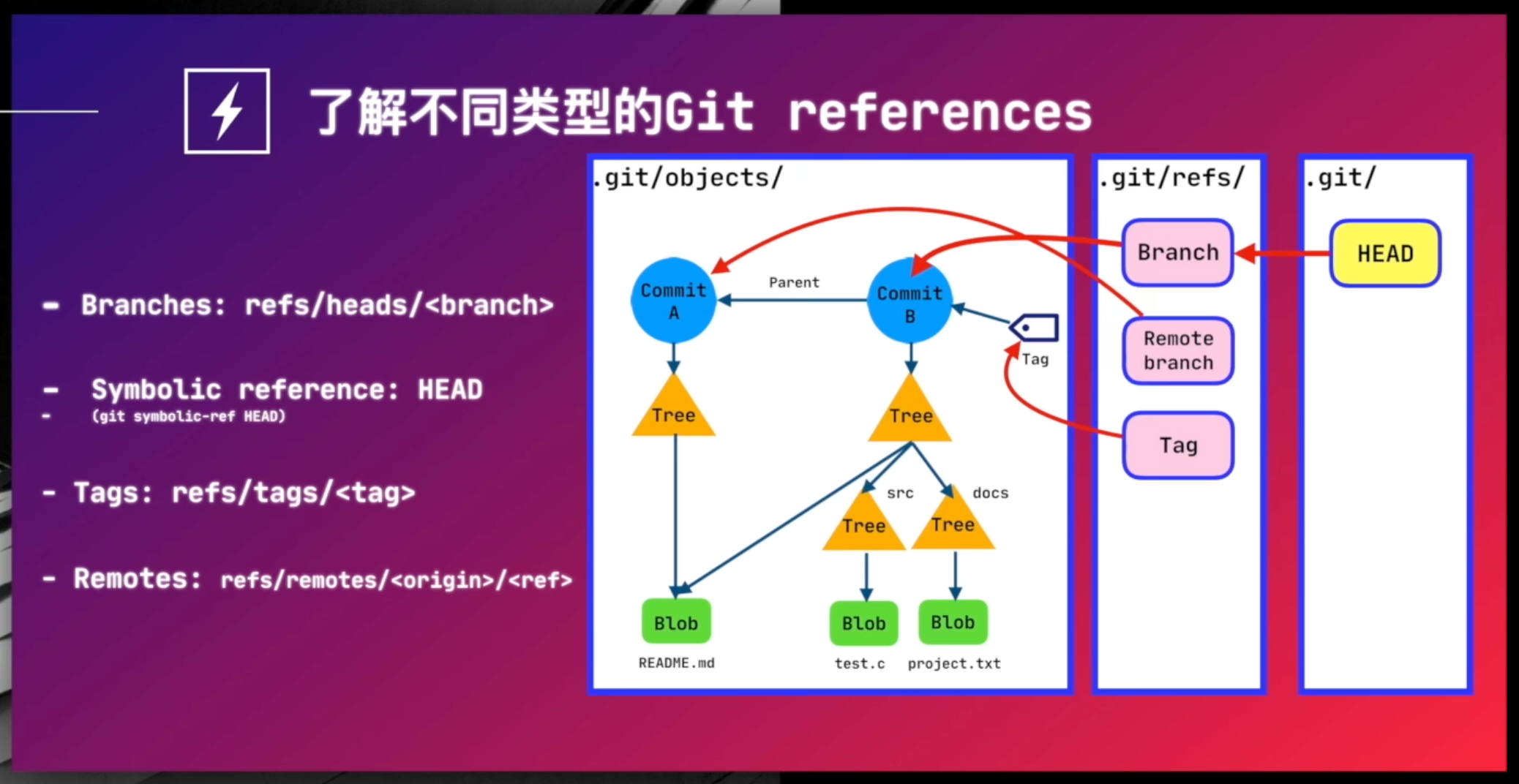

git

- Wasted a lot of time using git to manage my blog versions. Fuck you rebase, fuck you version conflicts. It cost me at least one hour, and the problem still isn't fixed: I committed a huge file and wanted to delete it. One hour later, everything was back to the way it was ^_^

- I want to learn the basic operations with the help of LLMs, especially Claude Opus 4.5 and Gemini 3.0 Pro. I hope to understand the basic concepts of git and gain the basic ability to manage versions with it.

- Aliyun has a course about git on bilibili, which is awesome! It delves into the principles behind git, so that I can understand what the commands are actually doing.


2025-11-30

llm-course

llm-course, provided by Hugging Face, is incredibly beginner-friendly: one of the most accessible, comprehensive, and sufficiently in-depth courses for anyone who wants solid knowledge of LLMs and practical techniques for working with Transformers.

A practical study path could be:

1. Textbook: Deep Learning from Scratch, for basic DL foundations.
2. Online course: happy-llm, which covers the basics and gives a comprehensive overview of LLMs; simpler than Hugging Face's llm-course.
3. Online course: llm-course, which is deeper, very readable, and builds everything from scratch. Truly a "best-of-everything" course that's a pleasure to read. It dives into many concepts that are skipped in happy-llm.

llm-course also links to many deeper resources and documentation, for example the Advanced cookbook, Dataset performance, and Transformers performance.

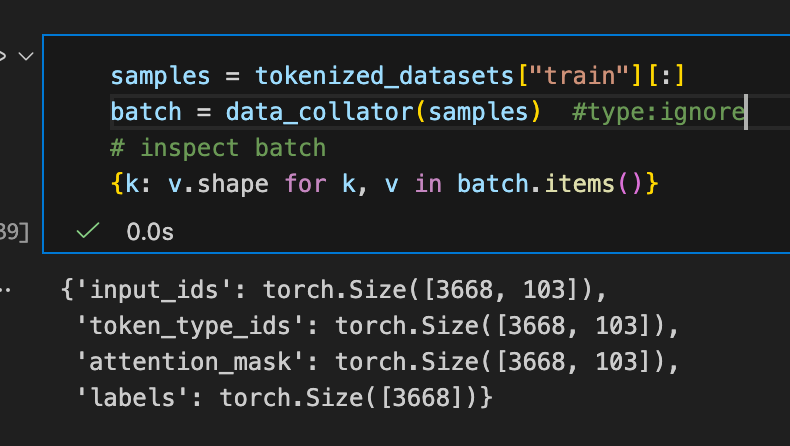

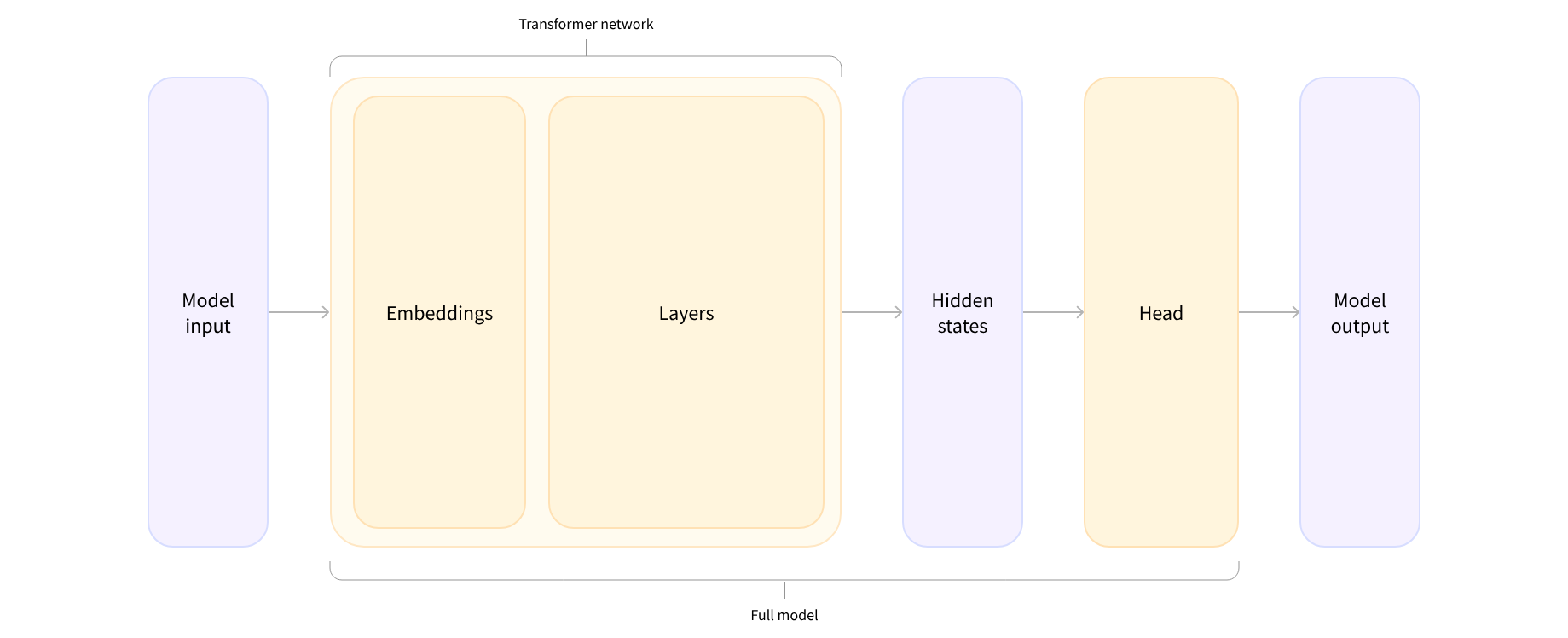

llm-course Chapter 2: Using Transformers

- Head: The base model outputs hidden states; a 'head' module then transforms them into the final output (for example, classification logits).

- Multiple sequences:

      tokens = tokenizer(
          sequences,
          padding=True,
          truncation=True,
          return_tensors="pt",  # return PyTorch tensors
      )

- Optimizing inference deployment: FlashAttention in TGI, PagedAttention in vLLM, and llama.cpp.

- Tokenizer:

      from transformers import AutoTokenizer

      tokenizer = AutoTokenizer.from_pretrained(checkpoint)
      model_inputs = tokenizer(sequences)
      # Here the tokenizer instance is called like a function; this uses
      # Python's `__call__` magic method.
      # It is equivalent to: tokenizer.__call__(sequences)
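To make the `padding`, `truncation`, and `__call__` notes above concrete, here is a minimal pure-Python sketch. `ToyTokenizer`, its tiny vocabulary, the `max_length` of 6, and the `"toy-checkpoint"` name are all hypothetical and made up for illustration; this is not how Transformers implements its tokenizers, only a rough picture of the behavior.

```python
from dataclasses import dataclass


@dataclass
class ToyTokenizer:
    """Hypothetical whitespace tokenizer; NOT the Transformers implementation."""

    vocab: dict
    max_length: int = 6  # assumed limit, used only when truncation=True
    pad_id: int = 0
    unk_id: int = 1

    @classmethod
    def from_pretrained(cls, checkpoint: str) -> "ToyTokenizer":
        # A real tokenizer loads its vocabulary from the checkpoint.
        # Here `checkpoint` is ignored and a fixed toy vocabulary is used.
        words = ["i", "love", "this", "course", "so", "much", "hate", "it"]
        return cls(vocab={word: index + 2 for index, word in enumerate(words)})

    def __call__(self, sequences, padding=False, truncation=False):
        # Defining __call__ is what lets an instance be used like a function.
        ids = [
            [self.vocab.get(word, self.unk_id) for word in text.lower().split()]
            for text in sequences
        ]
        if truncation:
            # Cut every sequence down to at most max_length tokens.
            ids = [seq[: self.max_length] for seq in ids]
        masks = [[1] * len(seq) for seq in ids]
        if padding:
            # Pad every sequence to the longest one in the batch;
            # the attention mask marks padding positions with 0.
            longest = max(len(seq) for seq in ids)
            masks = [mask + [0] * (longest - len(mask)) for mask in masks]
            ids = [seq + [self.pad_id] * (longest - len(seq)) for seq in ids]
        return {"input_ids": ids, "attention_mask": masks}


tokenizer = ToyTokenizer.from_pretrained("toy-checkpoint")
sequences = ["I love this course so much", "I hate it"]

batch = tokenizer(sequences, padding=True, truncation=True)
same = tokenizer.__call__(sequences, padding=True, truncation=True)
```

Both calls return the same dictionary. The shorter sequence is padded with `pad_id` up to the length of the longer one, and its attention mask is 0 at the padded positions, which is roughly what the model later uses to ignore padding.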